"The computer ... can give you the exact mathematical design, but what's missing is the eyebrows." (Frank Zappa)


Gandalf is a communicative humanoid capable of real-time conversation with human users. Gandalf's embodiment is a voice, a hand and a face, which appear on a small monitor in front of the user. To Gandalf's left is a large-screen display on which he can show a model of the solar system. Gandalf is an expert on the solar system, and users can ask him questions about the planets and travel to them using natural speech and gesture.

Gandalf is implemented in an architecture called Ymir, a computational model of human psychosocial dialogue skills. Gandalf's behavior rules for human face-to-face conduct come directly from the psychological literature on human-human interaction. They are modeled according to Ymir's structure, which is highly modular and expandable, making it easy to modify the system and to experiment with various mental processes and behavior "rules".

To construct Gandalf's dialogue skills, data from the psychological and linguistic literature was modeled in Ymir's layered, modular structure (cf. McNeill 1992, Rimé & Schiaratura 1991, Clark & Brennan 1990, Pierrehumbert & Hirschberg 1990, Grosz & Sidner 1986, Kleinke 1986, Goodwin 1981, Duncan 1989, Sacks et al. 1974, Yngve 1970, Efron 1941). This enables Gandalf to participate in collaborative, task-related activities with users, perceiving and manipulating the three-dimensional graphical model of the solar system: via natural dialogue a user can ask Gandalf to manipulate the model (zoom in, zoom out), travel to any of the planets, and tell the user about them.

What is Gandalf Capable of?

The capabilities of Gandalf are summed up in the following nine points.

Gandalf's perception extracts the following kinds of information from a conversational partner's behavior:

1. Eyes: Attentional and deictic functions of the conversational partner's gaze, during speaking and listening.
2. Hands: Deictic gesture (pointing at objects) and iconic gesture illustrating tilting (in the context of 3-D viewpoint shifts).
3. Vocal: Prosody (timing of the partner's speech-related sounds, and intonation) as well as speech content (words produced by a speech recognizer).
4. Body: Direction of head and trunk, and position of hands in body space.
5. Turn-taking signals: Various feature-based analyses of co-dependent and/or co-occurring multimodal events, such as intonation, hand position and head direction, and combinations thereof.

These perceptions (1-5) are interpreted in context to conduct a real-time dialogue. For example, when Gandalf takes the turn he may move his eyebrows up and down quickly and glance quickly to the side and back (a common behavior pattern for signaling turn-taking in the Western world), in the same way as humans do (typically 100-300 msec after the user gives the turn). This kind of precision is made possible by making timing a core feature of the architecture. Gandalf will also look (with a glance or by turning his head) in the direction that a user points. The perception of such gestures is based on data from multiple modes: where the user is looking, the shape of the user's hand, and intonation. The result is that Gandalf's behavior is highly relevant to the user's actions, even with high variability and individual differences between users.

Gandalf uses these perceptual data and interpretations to produce real-time multimodal behavior output in all of the above categories, with the addition of:

6. Hands: Emblematic gesture (e.g. holding the hand up, palm forward, signaling "hold it" to interrupt when the user is speaking) and beat gestures (hand motion synchronized with speech production).
7. Face: Emotional emblems (smiling, looking puzzled) and communicative signals (e.g. raising eyebrows when greeting, blinking differently depending on the pace of the dialogue, facing the user when answering questions, and more).
8. Body: Emblematic body language (nodding, shaking head).
9. Speech: Back-channel feedback.

These are all inserted into the dialogue by Gandalf in a free-form way, to support and sustain the dialogue in real time. While the perception and action processes are highly time-sensitive and opportunistic, the Ymir architecture allows Gandalf to produce completely coherent behavior.
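To make the turn-taking timing more concrete, here is a minimal Python sketch of how a feature-based detector might combine several of the cues listed above (intonation, hand position, head direction) and then schedule an eyebrow flash and a sideways glance 100-300 msec later. It is not Ymir's actual rule set: the `PerceptFrame` fields, the `MIN_SILENCE` threshold, the particular conjunction of cues, and the action names are all hypothetical assumptions chosen for illustration.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class PerceptFrame:
    """Hypothetical snapshot of multimodal features at time t (seconds)."""
    t: float
    speaking: bool        # voice activity detected on the microphone
    pitch_falling: bool   # intonation contour is falling at this moment
    hands_at_rest: bool   # hands back in a resting position (not gesturing)
    facing_agent: bool    # head/trunk oriented toward the agent


# Assumed values for illustration only, not taken from Ymir.
MIN_SILENCE = 0.12             # seconds of silence before a turn offer counts
RESPONSE_DELAY = (0.10, 0.30)  # respond 100-300 msec after detecting the offer


def detect_turn_offer(frames: List[PerceptFrame]) -> Optional[float]:
    """Return the time at which the user is judged to give the turn, or None.

    Hypothetical rule: the user has spoken, the last speech ended on falling
    intonation, the user has been silent for at least MIN_SILENCE, and is
    facing the agent with hands at rest.
    """
    heard_speech = False
    speech_end: Optional[float] = None
    falling_at_end = False
    for f in frames:
        if f.speaking:
            heard_speech = True
            speech_end = None
            falling_at_end = f.pitch_falling   # remember the latest contour
            continue
        if not heard_speech:
            continue                  # silence before any speech is not a turn offer
        if speech_end is None:
            speech_end = f.t          # first silent frame after speech
        if (f.t - speech_end >= MIN_SILENCE and falling_at_end
                and f.hands_at_rest and f.facing_agent):
            return f.t
    return None


def plan_turn_taking(detected_at: float,
                     rng: random.Random) -> List[Tuple[float, str]]:
    """Schedule a turn-taking signal within the RESPONSE_DELAY window."""
    start = detected_at + rng.uniform(*RESPONSE_DELAY)
    return [(start, "eyebrow_flash"), (start, "glance_side_and_back")]


if __name__ == "__main__":
    frames = [
        PerceptFrame(0.00, True, False, False, True),
        PerceptFrame(0.05, True, True, False, True),   # speech ends on a fall
        PerceptFrame(0.10, False, False, True, True),
        PerceptFrame(0.15, False, False, True, True),
        PerceptFrame(0.20, False, False, True, True),
        PerceptFrame(0.25, False, False, True, True),
    ]
    offer = detect_turn_offer(frames)
    if offer is not None:
        for when, action in plan_turn_taking(offer, random.Random(0)):
            print(f"{when:.3f}s  {action}")
```

Requiring a conjunction of cues, rather than silence alone, is one way to keep an agent from jumping in during a mid-utterance pause while the user is still gesturing; the randomized delay avoids a mechanical, fixed reaction time.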

Multimodal interaction is a complex phenomenon, and even more complex is the task of modeling human cognitive capabilities. Here are a few questions and misconceptions that people have expressed when confronted with Gandalf.

QUESTION #1: Is Gandalf's behavior "scripted"?

No. Not in any normal sense of that word. Gandalf uses compositional algorithms at multiple levels of granularity to "compose" continuous responses to continually streaming perceptual events. I describe this in my paper "A Mind Model for Multimodal Communicative Creatures and Humanoids" and on the Ymir page. Any life-like system must be very responsive to its surroundings to seem alive from the outside; other systems, using impoverished perceptual mechanisms (see below), will seem lifeless and scripted even if they have an incredibly complex mechanism behind their behavior. This is because the hallmark of intelligent behavior is not the ability to play chess or to utter grammatically perfect sentences, but the ability to be responsive to the environment.

QUESTION #2: Is Gandalf's behavior "simply selected from a library of stock responses"?

No. The multiple temporal levels of feedback (see Question #1), the separately controlled multiple modes, and the compositional rules for how to coordinate and combine these mean that Gandalf never repeats exactly the same behavior twice. Gandalf is capable of multimodal output, and the real-time combination of all its modes results in practically infinite combinations of gesture, gaze, facial expression and speech. Even within a single mode, like speech, Gandalf draws on a large collection of grammatical and knowledge-based patterns, which it combines in various ways depending on what the user has said before. This is described in Chapters 7, 8 and 9 of my thesis.

QUESTION #3: Would Gandalf cease to operate without the heavy body tracking gear that allows him to perceive the world?

No. But he wouldn't be as life-like. While computing power currently prevents Gandalf from composing realistic responses at the fastest timescales (less than 1 second) without the body tracking technologies, recent advances in computing power will allow a complete replacement of body tracking equipment with cameras. This has been partially shown in our research paper "The Power of a Nod and a Glance."

QUESTION #4: Isn't using body tracking "cheating"?

No. While low-level perception problems such as movement analysis, figure-ground separation, blob detection and object recognition can be avoided by using body tracking, high-level perception is an equally difficult and important problem. Gandalf does a whole lot of high-level vision and multimodal perception. If we had to wait until computer vision reached a more mature stage in low-level analysis, it would not be possible to work on a number of other hard problems relating to interaction, such as dialogue control, high-level perception, contextual emotional emblems, and task-oriented face-to-face collaboration.

QUESTION #5: When can I have one?

[answer updated: 2008] Not yet. While the Japanese are busy building humanoid robots, they cost as much as an expensive luxury car and do about as much as an advanced washing machine (and your clothes still need washing!). Gandalf was built using 8 workstations (expensive computers at the time), and, although the software that ran it could be run on a regular home computer today, the sensory apparatus that allowed Gandalf to be highly responsive and relevant in its behaviors is still far from perfected. Until we have more sophisticated computer vision and hearing systems, Gandalf and his like will be confined to the laboratory.

A machine capable of taking the place of a person in face-to-face dialogue needs a rich flow of sensory data. Moreover, its perceptual mechanisms need to support interpretations of real-world events that can result in real-time action of the type that people produce effortlessly when interacting via speech and multiple modes.

To enable Gandalf to sense people, the user has to dress up in somewhat bulky equipment: a suit that tracks the upper body [Bers] and gives him a "stick-figure, x-ray" view of the user; an eye tracker that enables him to see one eye of the user; and a microphone, which allows him to understand the words and the intonation (melody) of the user's speech. To make sure the massive data stream from this equipment is available fast enough for Gandalf to respond in real time, two PCs are used to crunch the body suit and eye tracker data, one SGI is used for speech recognition and one Macintosh is used for intonation and prosody analysis. The accuracy and speed of the data needed for Gandalf are such that this equipment is unlikely to be replaceable in less than 5-7 years [written in 1999].

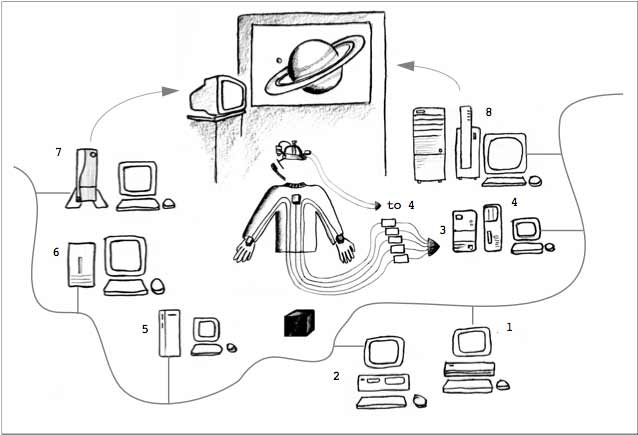

Figure: Gandalf system layout. Gray arrows show display connections; the gray line is Ethernet. The eye tracker (video and magnetic sensor outputs) is connected to computer 4 via a serial port, and the data is fed to computer 3 via another serial connection; output from the magnetic sensors on the jacket is connected to computer 3 via serial connections (dark arrowheads). The microphone signal is split to two computers (5 & 6) via an audio mixer (connections not shown). A DECtalk speech synthesizer is connected to computer 7 through a serial port (not shown). The black cube (connected to computer 3) generates the magnetic field needed for sensing the posture of the user's upper body [Bers].

"Now, is all this really necessary?", you may ask. The answer is "Yes, absolutely!" and "No, not really." For a prototype system this is by no means overkill, given the stated goal of the system: to replace a person in a two-person conversation with an artificial character without changing the interaction. With some work, however, this equipment, and the whole system, can be simplified significantly. This would take some time, even though there are no difficult scientific issues to be solved in this simplification.

The Gandalf demo relies on hardware equipment in three groups: a computer system, an output system, and an input system.

Gandalf's Embodiment: The "ToonFace" Animation Framework

To animate Gandalf's face, I created a cartoon-style animation system that I call ToonFace. The ToonFace system takes an object-oriented approach to graphical faces and easily allows for rapid construction of wacky-looking characters. Rendering these faces in real time is very simple and lightweight, which is important for real-time interactive characters. Here are some examples of other faces and facial expressions generated automatically with ToonFace:

This animation scheme allows a controlling system to address a single feature on the face, or any combination of features, and animate them smoothly from one position to the next (i.e. this is not morphing; any conceivable configuration of any movable facial feature can be achieved instantly without having to add "examples" to an exponentially expanding database). The system employs the notion of "motors" that operate on the facial features and move them in either one or two dimensions.
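The motor idea can be illustrated with a small Python sketch. The `Motor` and `Face` classes, the feature names, the normalized coordinates, and the constant-speed interpolation are all assumptions for illustration, not ToonFace's actual API. Each motor drives one facial feature along one or two axes toward a target, and a controller can set targets for any single feature or any combination of features without blending stored example poses.

```python
import math
from typing import Dict, Tuple, Union


class Motor:
    """Hypothetical motor that moves one facial feature in 1 or 2 dimensions."""

    def __init__(self, pos: Tuple[float, ...], speed: float = 2.0) -> None:
        if len(pos) not in (1, 2):
            raise ValueError("a motor drives one or two dimensions")
        self.pos = tuple(pos)
        self.target = tuple(pos)
        self.speed = speed            # max normalized units per second (assumed)

    def set_target(self, *target: float) -> None:
        if len(target) != len(self.pos):
            raise ValueError("target has the wrong number of dimensions")
        self.target = tuple(target)

    def step(self, dt: float) -> None:
        """Move toward the target by at most speed * dt (constant-speed path)."""
        delta = [t - p for t, p in zip(self.target, self.pos)]
        dist = math.sqrt(sum(d * d for d in delta))
        max_step = self.speed * dt
        if dist <= max_step:
            self.pos = self.target    # close enough: land exactly on the target
        else:
            scale = max_step / dist
            self.pos = tuple(p + d * scale for p, d in zip(self.pos, delta))


class Face:
    """A face is a set of named motors; any subset can be driven at once."""

    def __init__(self) -> None:
        self.motors: Dict[str, Motor] = {
            "left_brow": Motor((0.0,)),        # vertical only
            "right_brow": Motor((0.0,)),
            "mouth_corners": Motor((0.0,)),
            "pupils": Motor((0.0, 0.0)),        # horizontal and vertical
        }

    def pose(self, **targets: Union[float, Tuple[float, float]]) -> None:
        """Set targets for the named features; other features keep theirs."""
        for name, value in targets.items():
            values = (value,) if isinstance(value, (int, float)) else value
            self.motors[name].set_target(*values)

    def update(self, dt: float) -> None:
        """Advance every motor by one animation frame of length dt seconds."""
        for motor in self.motors.values():
            motor.step(dt)


face = Face()
face.pose(left_brow=1.0, right_brow=1.0, pupils=(0.3, 0.4))  # brow raise + glance
for _ in range(20):                                            # 20 frames of 50 msec
    face.update(0.05)
print(face.motors["left_brow"].pos, face.motors["pupils"].pos)  # (1.0,) (0.3, 0.4)
```

Because each motor is independent, raising one eyebrow, or both eyebrows while glancing, is just a different set of targets; no new example poses need to be stored. A production system would likely use easing curves rather than the constant-speed path used here.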

The ToonFace animation mechanism has been duplicated in Java by researchers at the Fuji-Xerox Palo Alto Research Laboratories.

The CWI (Centrum Wiskunde & Informatica) in Amsterdam used ToonFace principles to build new animation software. From 1998 to 2004 their spinoff EPICTOID developed a suite of animation tools based on these ideas.

Thórisson, K. R. (1996). ToonFace: A System for Creating and Animating Cartoon Faces. M.I.T. Media Laboratory, Learning & Common Sense Section Technical Report 1-96.

See also the paper titled "Layered Modular Action Control for Communicative Humanoids".

ToonFace has now become a new specification for animated faces, hosted at MINDMAKERS.ORG.

Ymir and Gandalf are the main topics of my thesis, whose chapters can all be downloaded. Additionally, I discuss reactive architectures in dialogue via my J.Jr. agent, and general issues in intelligent interface agent design.


| Publication | Description |
|---|---|
| Thórisson, K. R. (1999). A Mind Model for Multimodal Communicative Creatures and Humanoids. International Journal of Applied Artificial Intelligence, 13(4-5): 449-486. | Main architectural overview paper. Describes the underlying architecture, Ymir, used to control Gandalf. This is the crux of my thesis. |
| Thórisson, K. R. (1998). Real-Time Decision Making in Multimodal Face to Face Communication. Second ACM International Conference on Autonomous Agents, Minneapolis, Minnesota, May 11-13, pp. 16-23. | Describes the decision mechanisms in the Ymir architecture used to control Gandalf, and some of the rules behind Gandalf's behaviors. |
| Thórisson, K. R. (1997). Layered Modular Action Control for Communicative Humanoids. Computer Animation '97, Geneva, Switzerland, June 5-6, pp. 134-143. | Details the animation and planning control mechanisms in Ymir, as implemented in Gandalf. |
| Thórisson, K. R. (1997). Gandalf: An Embodied Humanoid Capable of Real-Time Multimodal Dialogue with People. First ACM International Conference on Autonomous Agents, Marriott Hotel, Marina del Rey, California, February 5-8, pp. 536-537. | Short overview of the Gandalf system itself; describes the demo setup. |
| Thórisson, K. R. & Cassell, J. (1996). Why Put an Agent in a Body: The Importance of Communicative Feedback in Human-Humanoid Dialogue. Lifelike Computer Characters '96, Snowbird, Utah, October 5-9. | Describes results from experiments showing the importance of what some might call "subtleties" in social dialogue. Describes how the Gandalf prototype incorporates these, and the justification for using an architecture that allows for such features. |
| Thórisson, K. R. (1996). ToonFace: A System for Creating and Animating Cartoon Faces. M.I.T. Media Laboratory, Learning & Common Sense Section Technical Report 1-96. | Describes the animation framework for rendering Gandalf in real time. ToonFace is now a specification for "simplest" case facial animation. |

For more information, see my selected publications.


Copyright 2005 K.R.Th. All rights reserved.