3.14.2003

Overview

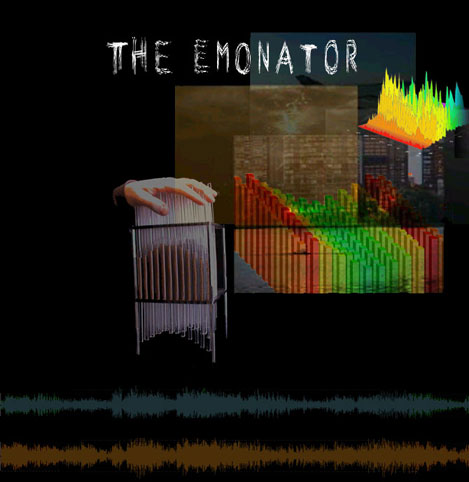

The Emonator is one of the interfaces, and the most versatile at that, used to provide control input for the Emonic Environment, a real-time improvisational media system. Originally, the Emonator was conceived as a combination of software and hardware with the aim of exploring the concept of emons as the building blocks for aesthetic expression, content delivery, and information exchange. The Emonator was designed in collaboration with Dan Overholt, on whose page you can find details of the hardware design (Dan also later adopted the name MATRIX to refer to the device in his own, independent work).

The rationale for the design of the Emonator was to generate emons of varying complexity and forms by interpreting pressure-like hand gestures.

The Emonator can serve as a multipurpose application for interactive control of music and video, inviting users to create aesthetic experiences using emotionally expressive gestures (particularly when used within the Emonic Environment). The aim is to allow everyone to be a composer / DJ / video editor without having to learn a complex system of mappings. Traditional performance instruments, like existing electronic instruments, force the user into predefined ways of creating emotional expression. Our goal is to create a truly new and adaptive interface that acknowledges the natural ways of emotional expression for creating an aesthetic experience. This way we hope to make the Emonator into an affective performance instrument that will allow you to 'play' your feelings without having to learn a complex system of mappings.

Currently we are developing a line of applications that allow the Emonator to interpret gestures for the dynamic creation of music and video. The music controller provides three levels of music control: an audio shaper, a pattern composer, and a pattern navigator (not yet functional). The video controller allows users to navigate through and mix multiple live video streams (currently limited to 4).

The device hardware consists of a 12x12 array of closely positioned, spring-loaded plexiglas rods. The position of each rod (known to 7-bit precision) is sensed using a pair of infrared light detectors and is interpreted by the underlying FPGA-based motherboard. Each rod's position is then transmitted to a connected PC, which uses these signals to generate music and video. We also plan to provide an additional layer of action feedback by using computer-controlled RGB-colored lights that will shine through the plexiglas rods. Full details about the hardware can be found in Dan's thesis (PDF 26MB).
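
As a rough illustration of what the PC side has to do with this data, the sketch below turns one frame of rod positions into a 12x12 grid. The wire format is an assumption made for the example, not the documented protocol: it supposes one byte per rod, sent row by row, with the position in the low 7 bits. The names `decode_frame` and `normalize` are hypothetical.

```python
from typing import List

ROWS = 12
COLS = 12
MAX_POSITION = 0x7F  # 7-bit precision gives positions 0..127


def decode_frame(frame: bytes) -> List[List[int]]:
    """Turn one frame of raw bytes into a 12x12 grid of rod positions.

    Assumed layout (not taken from the hardware documentation):
    144 bytes, row-major order, position stored in the low 7 bits.
    """
    if len(frame) != ROWS * COLS:
        raise ValueError(f"expected {ROWS * COLS} bytes, got {len(frame)}")
    values = [b & MAX_POSITION for b in frame]  # discard the unused top bit
    return [values[r * COLS:(r + 1) * COLS] for r in range(ROWS)]


def normalize(grid: List[List[int]]) -> List[List[float]]:
    """Scale positions to the range 0.0 (lowest value) .. 1.0 (highest value)."""
    return [[v / MAX_POSITION for v in row] for row in grid]
```

A normalized grid of this kind is a convenient input for whatever mapping drives the music or video application, since it hides the sensor resolution from the rest of the code.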

Data Gathering and Analysis

The Emonator project serves two purposes in the overall development of the Emonic Environment:

At the first stage, we are using it as a measurement / analysis interface for premade emotional musical patterns (emons). A user is introduced to the Emonator application and asked to tangialize (move from the auditory to the physical domain) a series of emons (short musical phrases) that are played for them. The data gathered from multiple subjects (pressure, speed, movement patterns, etc.) is then analyzed to derive common physical patterns of response to each of the emons. Along with providing interesting scientific results (for interpretation of affect), this will also allow us to refine the Emonator's performance.

We hope that the Emonator will allow us to test the hypothesis that a person can take coherent control of real-time sources of affect and orchestrate a unique experience corresponding to their emotional state. The experimental data gathered at the first stage will allow us to create mappings that will be used by the performer in the creation of a free-form musical / visual structure. Rather than relying on a user's expertise and performing ability, the Emonator will give its user the freedom of emotionally natural tangible expression.
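
The first-stage analysis described above (reducing each subject's gesture to measures such as pressure and speed, then looking for what subjects have in common) could be sketched as follows. This is only an illustration under stated assumptions, not the analysis actually used: each recording is assumed to be a sequence of frames of normalized rod depths (0.0 to 1.0), "pressure" is taken as the mean depth, "speed" as the mean frame-to-frame change, and the common pattern as a plain average over subjects. The function names are hypothetical.

```python
from statistics import mean
from typing import Dict, Sequence

Frame = Sequence[float]  # flattened rod depths, assumed normalized to 0.0..1.0


def gesture_features(frames: Sequence[Frame]) -> Dict[str, float]:
    """Summarize one subject's response to one emon."""
    if not frames:
        raise ValueError("recording contains no frames")
    pressure = mean(mean(f) for f in frames)  # average depth over the recording
    if len(frames) < 2:
        speed = 0.0  # a single frame carries no movement information
    else:
        # average absolute change per rod between consecutive frames
        speed = mean(
            mean(abs(b - a) for a, b in zip(prev, cur))
            for prev, cur in zip(frames, frames[1:])
        )
    return {"pressure": pressure, "speed": speed}


def average_response(recordings: Sequence[Sequence[Frame]]) -> Dict[str, float]:
    """Average the per-subject features for one emon across all subjects."""
    features = [gesture_features(r) for r in recordings]
    return {key: mean(f[key] for f in features) for key in features[0]}
```

Movement patterns (for example, where on the grid the hand presses) would need richer features than these two scalars; the sketch only shows the shape of the per-subject, then cross-subject, reduction.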

The Emonator was the first of the Emonic Environment's components to be developed; now there are quite a few more. It is our hope that the Emonator will provide empirical evidence for the validity of our composition approach and techniques, and will help us in creating large-scale art environments (such as the one described in the EmoWall proposal). It is our small-scale attempt at creating a coherent emotion-adaptive system of interactions between the Emonic Environment and the visitor. The Emonator has also been used as a controller in museum installations.

Media

A few of the planned musical modes of the Emonator's operation (this is quite old):

active movement, relaxed movement, shift between modes, loop-based mode, a complete piece (2.2MB).

Preliminary videos showing the MATRIX performing real-time Granular Synthesis over the Internet:

If you are on a high-speed connection, try the QuickTime: video I, video II, video III (10-23MB files).

If you are on a slow connection, try the Windows Media copy: video I, video II, video III (1-4MB files).

NEW: a gesture-based application using all 12 rows (144 rods) (update soon).

Project Status

Current Emonator version: 0.9. Stay tuned.

Please e-mail your questions to pauln at media.mit.edu

Credits

The Emonator began as a collaborative project between the Interactive Cinema and Hyperinstrument groups at the MIT Media Lab.

We would like to thank Professors Glorianna Davenport and Tod Machover for their support.

Data conversion, MIDI, and network applications: Paul Nemirovsky

Design of the MATRIX interface & Granular Synthesis shown in videos: Dan Overholt

Vocalator synthesis application & help with SuperCollider, MAX, and network programming: Tristan Jehan

Data analysis, video applications, & MATRIX construction for Emonator project: Andrew Yip

Help with data analysis & network libraries: Ali Rahimi

Logos design: Shirley Waisman

Granular synthesis videos shot/edited by Ali Mazalek and Dan Overholt

* The interface idea was originally conceived jointly by Paul Nemirovsky and Dan Overholt.

Although the interface was initially called the Emonator, the project later separated into two main directions:

(1) The Emonator project uses the MATRIX interface to explore the concept of emons as building blocks for aesthetic expression and audiovisual content creation;

(2) The MATRIX project focuses on the interface design and development of musical synthesis and signal processing applications.