Fun with Neural Style Transfer

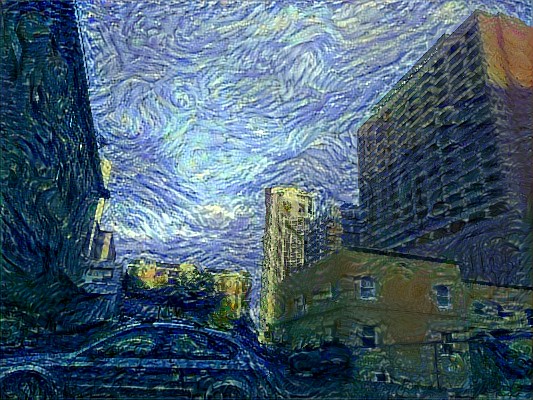

One of the most straightforward applications of neural networks to implement, neural style transfer takes two input images and outputs an image that combines the 'style' of one with the 'content' of the other. Although it uses deep learning tools, no network is actually trained: one uses an off-the-shelf network that was pretrained for image analysis, and only the pixels of the generated image are optimized. The basic idea is to identify an image's content with the activations of one of the highest layers of the network, which respond to the most global information in the image. The style, in turn, is represented by correlations among the activations of several layers at different depths of the network, corresponding to a range of spatial scales that together define the visual texture. The algorithm balances two goals: matching the generated image's content-layer activations to those produced by the content source image, and matching its style-layer statistics to those produced by the style reference image.
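The balancing act described above can be written down in a few lines. What follows is only a minimal NumPy sketch, not the book's code: it assumes layer activations are already available as arrays of shape (height, width, channels), compares style through Gram matrices (channel-by-channel correlations), and uses a common normalization for the style term. The function names and the default weights are illustrative choices.

```python
import numpy as np

def content_loss(base, combination):
    """Sum of squared differences between two activation arrays."""
    return float(np.sum(np.square(combination - base)))

def gram_matrix(features):
    """Channel-by-channel correlations of a (height, width, channels) activation."""
    flat = features.reshape(-1, features.shape[-1])  # (pixels, channels)
    return flat.T @ flat                             # (channels, channels)

def style_loss(style, combination):
    """Squared distance between Gram matrices, with a common normalization."""
    h, w, c = style.shape
    diff = gram_matrix(style) - gram_matrix(combination)
    return float(np.sum(np.square(diff)) / (4.0 * (c ** 2) * ((h * w) ** 2)))

def total_loss(content_pair, style_pairs, content_weight=0.025, style_weight=1.0):
    """Weighted sum of one content term and several style terms.

    content_pair: (content-image activation, generated-image activation)
    style_pairs:  list of (style-image activation, generated-image activation),
                  one pair per style layer.
    The default weights are illustrative assumptions, not tuned values.
    """
    base, combo = content_pair
    loss = content_weight * content_loss(base, combo)
    for style, combo_s in style_pairs:
        loss += (style_weight / len(style_pairs)) * style_loss(style, combo_s)
    return loss
```

Because the Gram matrix sums over every pixel position, it discards where a feature occurs and keeps only which features occur together. This is why it captures texture rather than layout.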

The code to generate these effects can be found in Deep Learning with Python by François Chollet, listings 8.14 to 8.22. The only modification needed to run the code as presented, aside from specifying the image to modify and the style reference image, is to replace the deprecated imsave function with imwrite, imported from the imageio library.
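For anyone making the same swap, it might look roughly like the sketch below. It assumes the book's imsave is the one from scipy.misc and that the image is a NumPy array. The helpers to_uint8 and save_image are hypothetical names introduced here for illustration, not taken from the listings.

```python
import numpy as np

def to_uint8(img):
    """Clip a float image array to [0, 255] and cast to uint8 for saving."""
    return np.clip(img, 0, 255).astype("uint8")

def save_image(fname, img):
    """Write an image array to disk using imageio instead of imsave."""
    # Deferred import: imageio is only needed at save time.
    # Before (assumed original): from scipy.misc import imsave; imsave(fname, img)
    from imageio import imwrite
    imwrite(fname, to_uint8(img))
```

The argument order (file name first, then the array) is the same for both functions, so in practice only the import line and the function name need to change.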

My downtown San Diego neighborhood at dusk, in the style of Van Gogh's The Starry Night:

A springtime view down the hill to San Diego Bay, in the style of the London version of Leonardo's Virgin of the Rocks:

The same view not long before sunset on New Year's Eve 2019, in the style of Monet's painting of Rouen cathedral at sunset:

Another view shortly after the previous, in the style of El Greco's View of Toledo:

Yet another from the same evening, in the style of Cézanne's Château Noir:

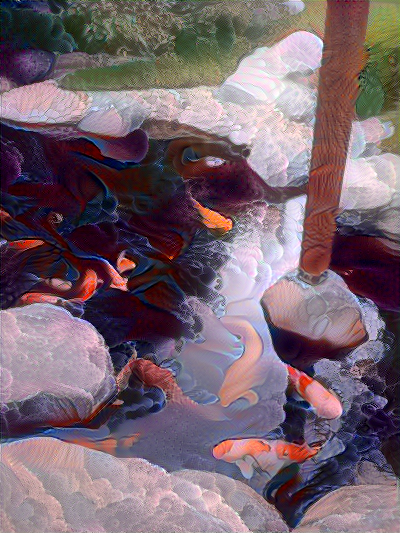

Koi fish in Balboa Park, in the style of Finding Nemo: